FEBRUARY 18 2025

Why open source is even more important in an AI-powered world

Open source AI tools enable greater flexibility and innovation when building AI-powered apps.

Generative AI is set to fundamentally change how apps are built over the next decade. Whether it's the latest developments with large language models or traditional machine learning approaches, incorporating models into an app allows for unmatched personalization, discovery, and productivity. Choosing the right approach for integrating AI into your business can influence your operational efficiency and innovation capacity.

Why open source is a core tenet at Hypermode

Hypermode's mission is to democratize creativity and innovation through AI. The AI landscape is evolving at an unprecedented pace, making flexibility a necessity rather than a luxury. We fundamentally believe that open source developer tools and even AI models provide this agility, allowing teams to mix and match components in their stack as new advancements emerge.

The recent launch of DeepSeek, an open source AI reasoning model, has clearly shown how open source technology empowers developers to experiment, swap out models, and optimize workflows without being constrained by proprietary restrictions. High-performing, differentiated models keep arriving, and we're seeing that customers want to adopt these new models incrementally and to choose the right one only after understanding its outputs. The ability to compare models transparently empowers teams to choose the precise capabilities that best serve their unique needs.

Furthermore, hosting open source models in-house or on private cloud infrastructure enhances data privacy, a critical concern for industries handling sensitive information. Instead of sending data to external APIs, teams can process it securely within their own environments, which supports regulatory compliance and reduces risk.

Beyond flexibility and privacy, open source AI integrates seamlessly with popular frameworks, streamlining development workflows. Whether building with LangChain or Modus, open source models and tools fit naturally into existing ecosystems. This interoperability removes technical barriers, making it easier to build, deploy, and scale AI apps efficiently. As AI continues to evolve, the ability to customize and control the full stack will be a defining factor for teams that want to stay competitive.

Adding AI-powered features to an example app

Building AI-powered features can be a challenge, but with the right framework, it becomes much easier.

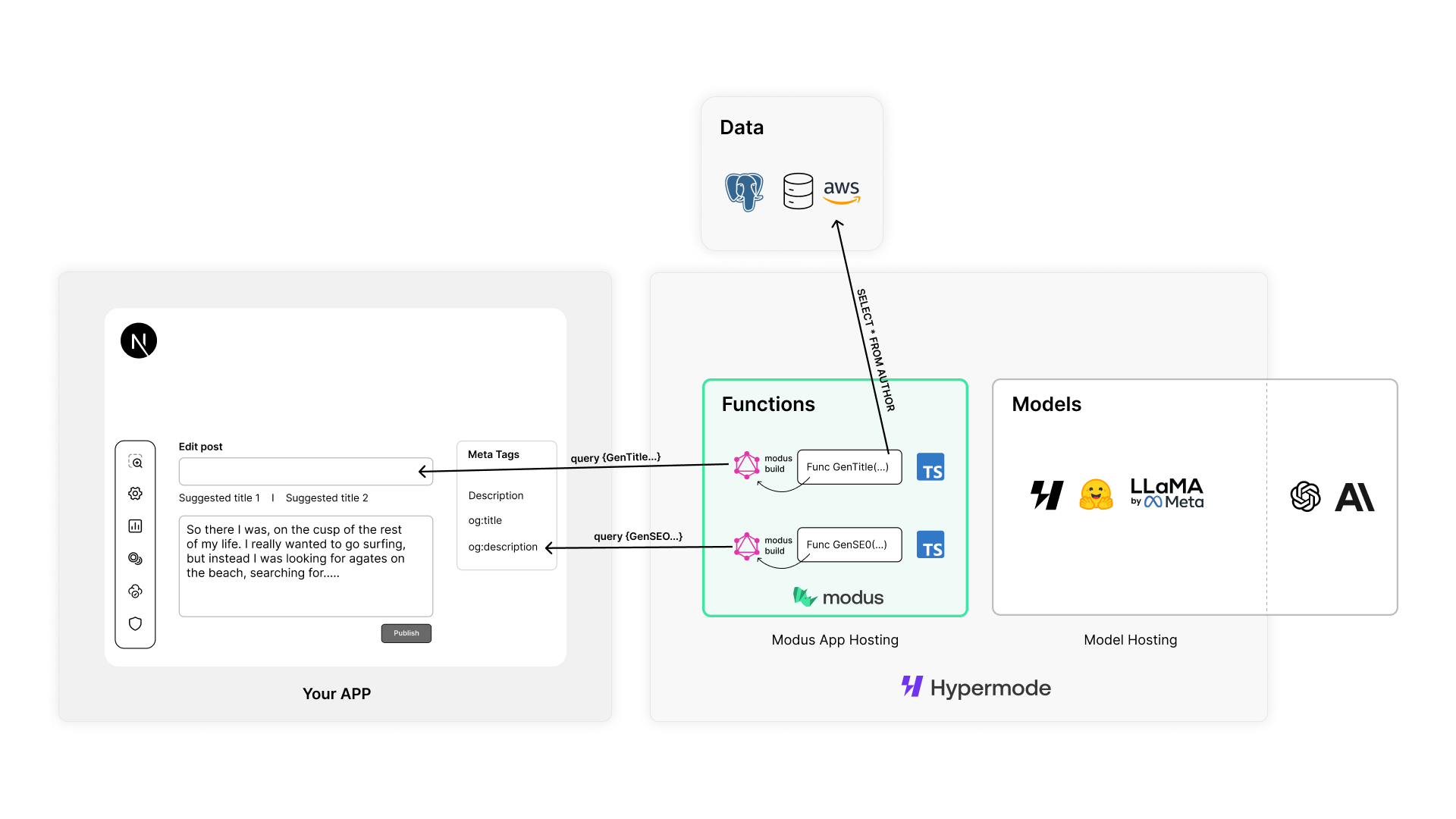

Let’s walk through how to integrate large language models (LLMs) into a web or mobile app using Modus, an open source intelligent API framework. Modus is designed to be incrementally adoptable, which is perfect for adding AI-backed features to an existing app.

For this example, we'll enhance a blogging platform with AI-powered features. Specifically, we will:

- Generate suggested blog post titles based on content and author style

- Create SEO-friendly meta descriptions automatically

- Pull internal business data (such as author details) to customize AI-generated content

Setting up the project

1. Install the Modus command-line tool

npm install -g @hypermode/modus-cli

Make sure you have Node.js v22 or higher installed.

2. Initialize a new Modus project

modus new

This command prompts you to choose between Go and AssemblyScript as the language for your app. In this example, we use AssemblyScript. Modus then creates a new directory with the necessary files and folders for your app. You'll also be asked if you'd like to initialize a Git repository.

3. Define API endpoints, models, & data connections

Open the modus.json manifest to define API endpoints, models, and data connections.

The endpoints section defines the GraphQL endpoint for your Modus project. This is where your backend functions are exposed. We're using GraphQL as the default API in this example.

To integrate an LLM, we configure the model provider in modus.json. In this example, we use the open source Llama model hosted on Hypermode. The platform makes it easy for you to test and swap different models until you find the best model for your specific use case.

To take advantage of Hypermode-hosted models, we first install the Hyp command-line tool with npm install -g @hypermode/hyp-cli and then run hyp login to link our local development environment with Hypermode.

Finally, establish a connection to a Postgres database backing the blogging platform to retrieve author details.
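As a rough sketch of what such a manifest might contain, the TypeScript below builds a manifest-shaped object and serializes it to JSON. The three top-level sections follow the description above. Everything inside them is an assumption for illustration: the entry names (text-generator, postgres), the model identifier, the provider and connection values, and the connection string placeholders. The helper withSourceModel is hypothetical and only shows that swapping models can be a one-field change. Check the Modus documentation for the exact schema.

```typescript
// Hypothetical shape of a modus.json manifest, written as a typed object so it
// can be checked and serialized. Field names inside each section are assumptions.
interface Manifest {
  endpoints: Record<string, { type: string; path: string; auth?: string }>;
  models: Record<string, { sourceModel: string; provider: string; connection: string }>;
  connections: Record<string, { type: string; connString: string }>;
}

const manifest: Manifest = {
  // Where the generated GraphQL API is exposed.
  endpoints: {
    default: { type: "graphql", path: "/graphql", auth: "bearer-token" },
  },
  // The LLM the functions invoke (model identifier chosen for illustration).
  models: {
    "text-generator": {
      sourceModel: "meta-llama/Llama-3.2-3B-Instruct",
      provider: "hugging-face",
      connection: "hypermode",
    },
  },
  // The Postgres database backing the blogging platform (placeholder credentials).
  connections: {
    postgres: {
      type: "postgresql",
      connString: "postgresql://{{USERNAME}}:{{PASSWORD}}@localhost:5432/blog",
    },
  },
};

// Hypothetical helper: return a copy of the manifest that points one model
// entry at a different source model, leaving the original untouched.
function withSourceModel(base: Manifest, name: string, sourceModel: string): Manifest {
  const current = base.models[name];
  if (current === undefined) throw new Error(`unknown model: ${name}`);
  return { ...base, models: { ...base.models, [name]: { ...current, sourceModel } } };
}

const manifestJson: string = JSON.stringify(manifest, null, 2);
console.log(manifestJson);
```

Keeping the model behind a named entry means the functions refer to text-generator rather than to a specific model, so trying a different model does not require touching function code.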

4. Implement AI-powered functions

Since Modus is a serverless framework, the functions we write are exposed as fields in a generated GraphQL API. This example includes four functions:

generateText, generateSEO, getAuthorByName, and generateTitle.

These functions demonstrate how to query a Postgres database and integrate its results with an AI model using Modus. This is a basic retrieval-augmented generation (RAG) workflow that chains internal data with an invocation of the LLM.

- Define a function generateText() to process user prompts and return AI-generated content by invoking the LLM

- Create generateSEO() to produce SEO-optimized meta tags based on our blog content

- Write getAuthorByName() to fetch author details from Postgres

- Implement generateTitle() to personalize blog titles using the author's bio and post content
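The way the four functions described above fit together can be sketched in plain TypeScript. This is not the Modus SDK: the model call and the Postgres query are replaced by hypothetical stand-ins (the Llm type, stubLlm, and an in-memory authors array) so that the chaining logic runs on its own. The function signatures and prompt wording are assumptions as well; the linked modus-press repository has the actual implementation.

```typescript
// Hypothetical stand-ins for what Modus would provide: a model invocation and
// a Postgres query. They exist only so this sketch is self-contained.
interface Author {
  name: string;
  bio: string;
}

type Llm = (instruction: string, prompt: string) => string;

const authors: Author[] = [
  { name: "Ada Writer", bio: "Backend engineer who writes about databases." },
];

// Stub LLM: summarizes its inputs instead of calling a real model.
const stubLlm: Llm = (instruction, prompt) =>
  `[${instruction.length} chars of instruction] ${prompt.slice(0, 40)}`;

// generateText: pass an instruction and a prompt to the model, return its text.
function generateText(llm: Llm, instruction: string, prompt: string): string {
  return llm(instruction, prompt).trim();
}

// generateSEO: ask the model for a meta description of the post content.
function generateSEO(llm: Llm, content: string): string {
  return generateText(llm, "Write an SEO-friendly meta description for this post.", content);
}

// getAuthorByName: in the real app this would be a query against Postgres.
function getAuthorByName(name: string): Author {
  const author = authors.find((a) => a.name === name);
  if (!author) throw new Error(`author not found: ${name}`);
  return author;
}

// generateTitle: retrieve the author's details first, then fold them into the
// instruction sent to the model. This is the retrieval-then-generate chain.
function generateTitle(llm: Llm, content: string, authorName: string): string {
  const author = getAuthorByName(authorName);
  const instruction =
    `Suggest a blog post title in the voice of ${author.name}. Author bio: ${author.bio}`;
  return generateText(llm, instruction, content);
}

console.log(generateTitle(stubLlm, "Why graph databases suit knowledge graphs", "Ada Writer"));
```

Passing the model in as a parameter is a choice made for this sketch: it keeps the data lookup and the prompt construction testable without a live model.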

5. Test the API

Run modus dev to start a local GraphQL API.

This command builds and runs your app locally in development mode and provides you with a URL to access your app's generated API.

We can use Postman to inspect the schema and test the GraphQL API. Modus now also includes an integrated API Explorer that can be used to explore and query the GraphQL API exposed by our Modus app. By querying these AI-powered endpoints we can generate metadata and titles dynamically, which can then be incorporated into the blogging platform’s frontend.
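For a sense of what a frontend call to one of these endpoints might look like, here is a minimal TypeScript sketch that builds a GraphQL request for a title suggestion and posts it with fetch. The field name generateTitle mirrors the function above, but the argument names (content, author), the operation name, and the response shape are assumptions; inspect the generated schema for the real ones. The endpoint URL is whatever modus dev prints.

```typescript
// JSON body of a GraphQL request.
interface GraphQLRequest {
  query: string;
  variables: Record<string, string>;
}

// Build the request for a title suggestion. Argument names are assumptions;
// confirm them against the schema exposed by the running app.
function buildTitleRequest(content: string, author: string): GraphQLRequest {
  return {
    query:
      "query Title($content: String!, $author: String!) { generateTitle(content: $content, author: $author) }",
    variables: { content, author },
  };
}

// Post the request to a running Modus app and return the suggested title.
// `endpoint` should be the URL that `modus dev` reports for the GraphQL API.
async function fetchTitle(endpoint: string, content: string, author: string): Promise<string> {
  const response = await fetch(endpoint, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildTitleRequest(content, author)),
  });
  if (!response.ok) throw new Error(`GraphQL request failed: ${response.status}`);
  const payload = await response.json();
  return payload.data.generateTitle;
}
```

With the app running locally, a call such as fetchTitle(url, postBody, "Ada Writer") would return the generated title as a string, where url is the address reported by modus dev.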

In this example, we've integrated AI-powered features into our blogging app. Now, authors can get:

- AI-generated SEO meta descriptions

- Tailored blog title suggestions

- AI-generated content based on internal business data

This approach shows how an open source framework like Modus simplifies AI integrations by abstracting models, data, and functions. The flexibility to swap models and the ability to quickly connect to various data sources help developers build intuition for how and where to leverage AI within their app.

Bringing AI-native apps to life with AWS

With our focus on giving developers everything they need to put AI into production, AWS is the natural platform of choice for us, given its focus on removing the undifferentiated heavy lifting for organizations of all shapes and sizes. The Hypermode platform is built on Amazon Elastic Kubernetes Service (EKS). Because the requirements of AI-native apps differ from those of traditional deterministic services, our ability to mix a variety of serverless and other AWS tooling allows Hypermode to build a cloud platform for agentic systems.

Many of the tools on the Hypermode platform are open source, enabling developers to leverage these solutions while contributing to their continuous improvement. Specifically, Hypermode maintains:

- Modus is an open source, serverless framework for building agents and AI features. It provides developers with a transparent and extensible framework for building and managing agentic systems and AI-native workflows. Its modular architecture allows builders to orchestrate across diverse systems, making it suitable for various use cases, from CI/CD pipelines to business process automation.

- Dgraph is a high-performance, distributed graph database that stands out for its horizontal scalability and low-latency querying capabilities. Implemented as a distributed system, Dgraph processes queries in parallel to keep latency low, even for complex queries. Its multi-tenancy capabilities enable your AI apps to have logically separated knowledge graphs.

- Badger is an embedded, fast and lightweight key-value store designed for simplicity and performance. It's particularly well-suited for apps requiring efficient data storage without the overhead of a full database system.

- Ristretto is a high-performance caching library optimized for efficiency and predictability. It offers a powerful caching solution with features like cost-based eviction policies and consistent performance under heavy load.

Start building today

In the rapidly evolving landscape of gen AI, adaptability, quick iteration, and frequent experimentation matter more than having a perfect strategy from the outset. Embracing open source allows you to stay at the forefront of ideating, building, deploying, and operating AI-native apps in production. By actively participating in and contributing to open source projects, Hypermode gives organizations the ability to adapt quickly to changing market demands and deliver cutting-edge solutions for their customers.

We can't wait to see what you build. Get started today:

- GitHub repository of blogging app example: https://github.com/hypermodeinc/modus-recipes/tree/main/modus-press

- Modus documentation: https://docs.hypermode.com/modus/overview

- Dgraph documentation: https://dgraph.io/docs/dgraph-overview/